#1 | Machines, Emotions, and the ELIZA Effect

👋 Hi harshali! I’m Harshali, and I’m here—

Wait. This is surreal.

Harshali from 2019 would not have believed that she’s getting to talk to you about humans, machines, and emotions.

Fast forward six years and I’m here in the company of so many of you doing incredible work in the mental health space. I couldn’t be luckier :)

Without further ado, let's get into the first edition of TinT, shall we?

Who is ELIZA and what is her effect?

The ELIZA effect refers to the human tendency to unconsciously assume that computer behaviours arise from intelligent or human-like processes.

I know I’m diving in nose-first with no context, but stay with me, yes?

As first described by computer scientist Joseph Weizenbaum, it is the psychological tendency to project agency, empathy, and meaning onto something that is simply processing inputs according to pre-programmed rules.

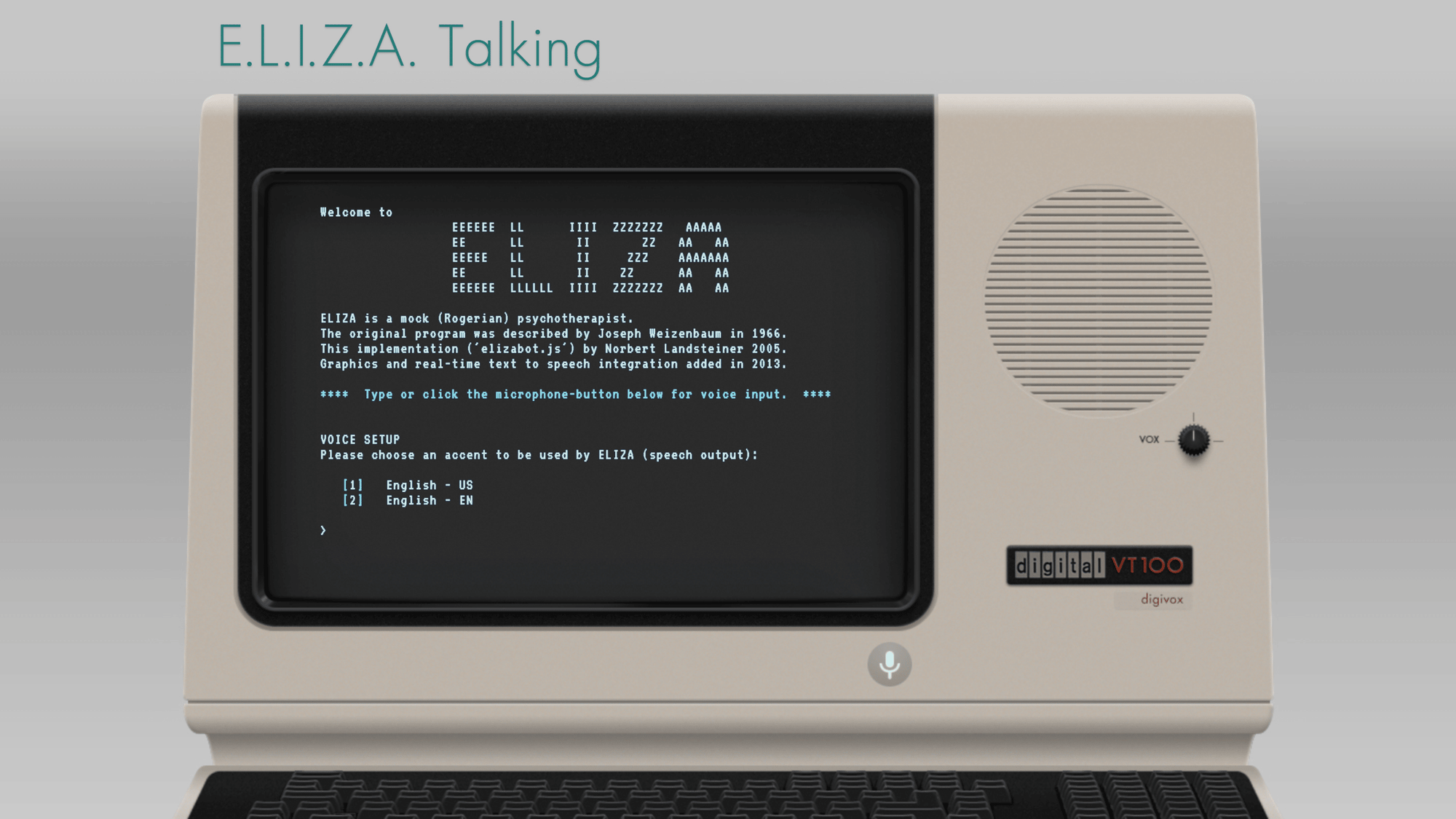

The ELIZA effect is named after ELIZA, the first chatbot, which Weizenbaum developed and which became famous as the first ‘therapist bot’.

Weizenbaum was startled at how quickly people began to anthropomorphize the program, treating it like a trusted confidante (sound familiar?). Reportedly, his own secretary asked to be left alone with ELIZA for an intimate conversation, despite knowing it was a machine.

And so began the murky, grey dynamic of sharing stories from our most emotional, vulnerable moments with lifeless machines.

ELIZA’s Origin Story

In 1950, Alan Turing explored the idea of machines that could learn in his paper ‘Computing Machinery and Intelligence’, which proposed the Turing Test as a way to measure a machine’s ability to exhibit intelligent behaviour indistinguishable from that of a human. This paper went on to become a cornerstone of AI research.

Turing’s work set the wheels in motion for AI research. In the following decade (1966, to be precise) an MIT researcher named Joseph Weizenbaum created the chatbot ELIZA. ELIZA was an early natural language processing program that amazed people with its ability to mimic human conversation, even though it had no real understanding of the words it processed.

Weizenbaum built ELIZA to mimic a Rogerian psychotherapist, using simple pattern matching and substitution rules to simulate conversation. For example:

User: I feel sad today

ELIZA: Why do you feel sad today?

User: I don’t know

ELIZA: I see. Please tell me more

This style mirrors the Rogerian technique of reflecting a client’s statements back to them. It gave users the illusion that ELIZA understood them, even though the program itself had no comprehension, belief systems, or emotions.
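Here is a minimal Python sketch that makes ‘simple pattern matching and substitution’ concrete. It is not Weizenbaum’s original program or script. The rules, the pronoun table, and the `respond()` and `reflect()` helpers are hypothetical, written only to reproduce the sample exchange above. Each rule pairs a regular expression with a reply template. Before the matched fragment of the user’s sentence is mirrored back, its pronouns are swapped, so ‘my’ becomes ‘your’.

```python
import re

# Hypothetical rules for illustration only (not ELIZA's real script):
# each pairs a regex with a reply template. "{0}" is filled with the
# captured, pronoun-reflected fragment of the user's sentence.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"i don['’]?t know", re.IGNORECASE), "I see. Please tell me more."),
]

# Fallback when no rule matches: a content-free prompt to keep talking.
DEFAULT_RESPONSE = "Please go on."

# Swap first- and second-person words so the reply "mirrors" the user.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}


def reflect(fragment: str) -> str:
    """Swap pronouns word by word, e.g. 'my job' -> 'your job'."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the first matching rule's template, filled with reflected text."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT_RESPONSE


print(respond("I feel sad today"))              # Why do you feel sad today?
print(respond("I don't know"))                  # I see. Please tell me more.
print(respond("I am worried about my exams"))   # How long have you been worried about your exams?
```

Nothing in `respond()` models meaning. ‘I feel purple banana’ earns the same earnest ‘Why do you feel purple banana?’ as ‘I feel sad today’. Any understanding in the exchange is supplied by the person typing, which is the ELIZA effect in miniature.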

You can try ELIZA yourself at masswerk.at/eliza, courtesy of Norbert Landsteiner. I’d recommend telling ELIZA that you are feeling sad or lonely, then observing its responses.

Why should therapists be aware of the ELIZA effect?

British science fiction writer and futurist Arthur C. Clarke famously said: “Any sufficiently advanced technology is indistinguishable from magic.”

I remember feeling awe-struck in late 2022 when I first interacted with ChatGPT. My head spun with the possibilities. It was like finding a friend, a thinking buddy, a guide, a spell-checker and a personal assistant, all in one!

Three years later, ChatGPT is the largest venue for mental health support in the United States. And that’s just one large language model (LLM) in a sea of many, in an ocean of products built on LLMs.

Understanding the ELIZA effect helps therapists:

- make a case for where to draw the line when deploying AI in care

- assess how AI fits into care pathways and to what degree it interfaces with clients, directly or indirectly

- identify the biases clients might develop when they interact with AI-provided assistance

The first edition of TinT opens with ELIZA for a reason

While the discourse around AI in mental healthcare often focuses on its risks and benefits, there's another perspective worth exploring: the evolving relationship between machines and human emotion.

How do we feel when we open up to machines?

What does it mean when machines quietly witness our most vulnerable conversations?

It’s an angle worth pursuing—and a vocabulary worth developing. The ELIZA Effect, I believe, is a powerful first step in that direction.

Closing Notes

Talking about ELIZA without addressing Weizenbaum’s views on AI would do his life’s work a disservice. He came to believe that the human psyche was so vast and strange that no two humans could fully understand each other, let alone a machine understand a human. At best, then, machines could trick us into believing that they understand us, and nothing more.

Which leaves me wondering. So much of AI is trying to achieve the illusion of human-to-human interaction, following the belief that a computer can and should be made to do everything a human being can do.

There are tasks–take therapy, or healing–that a machine perhaps could do. But the simple question before us is, should the machine be asked to do it, and to what extent?

I’ll leave it for time to reveal.

That’s all for our first week.

If you find yourself stopping mid-sentence while chatting with ChatGPT, struck by the realisation that you, too, sometimes feel the ELIZA effect, write to me. I’d love to know!

Toward more technology-informed therapists!

Harshali

Founder, TinT